The High Price of Convenience

Human Initiative Versus Digital Passivity

One of the problems with ChatGPT, as with all of the other AI models, is that you can never get exactly what you want; you just have to make do with whatever you are given.

My 8-year-old was working on an assignment for school in which he had to create a lengthy report on six different classes of animals: mammals, birds, reptiles, amphibians, fish, and invertebrates. Part of his assignment was to decorate the title page with artwork of his choice. He asked me for help, so we decided to see if ChatGPT/DALL-E would give us something he could use. Trying every variation of prompt and description we could think of, we asked these AI models for a coloring-book picture containing examples of each animal category. No matter what we asked or how we asked it, we only ever got back something that was not quite right, something just enough “off” that we didn’t think it would work.

It turns out that ChatGPT has a propensity to generate animal likenesses that aren’t real animals at all. Such drawings give off the vibe of actual animals, but in every picture we got, at least one alleged animal was fantastically deformed or off-kilter in some essential way. I began to imagine that the model’s training data was overpopulated with images of the kind of unhappy genetic mutations one sometimes finds in radiation-contaminated regions after some horrible accident (e.g., Chernobyl). A crocodile body with fins instead of legs, sporting the head of a barracuda. An animal-like creature with the head of a bear and the body of a cat. Amphibians sporting multiple tails. Animals with too many legs, or with a tail that merged and blended into some other appendage. At a cursory glance, the general busyness of these drawings left us with a positive impression, but whenever we looked more closely, the details were always a little twisted.

In the end, we were faced with the choice of adjusting our expectations to what we were being offered by the machine, or asserting ourselves to produce, on our own, what it was that we had in mind.

And therein lies a lesson.

One of the game-changing insights exploited by the technology behemoths of the 21st century has been that billions of people will happily exchange their privacy for convenience. Now, one of the open questions where AI is concerned is just how attached we really are to our own human agency. How far are we willing to go in reducing our expectations of ourselves to obtain the comfort and convenience that AI might provide? And, as my 8-year-old and I found ourselves needing to ask, how much are we willing to lower our standards to accept what we’re given in lieu of what we ourselves could actually do?

Technology, when employed as a tool to augment our inherently human capacities, can be a boon to one’s agency. But when technology starts to act more like a passive-aggressive concierge (deciding for us when to reorder our supplies, proactively adjusting the thermostat, choosing pictures that aren’t what we asked for, telling us how to drive, even deciding when to let our cars idle and when to turn them off), something dehumanizing is going on. We are being nudged, ever more forcefully, toward passivity. Alas, passivity is to human agency what anesthesia is to consciousness. Outsourcing a decision to technology here or there need not concern us. But the cumulative effect should give us pause.

At one point in my life, I was on the verge of death and required an improvisational major surgery which carried enormous risks. When they came in to have me sign the permission form, they rattled off all of the unhappy possibilities and what their probabilities were. The probability of any single unhappy outcome was always in the single digits. But it was the cumulative probability (I was adding up the individual numbers as they rattled them off) that I found alarming.

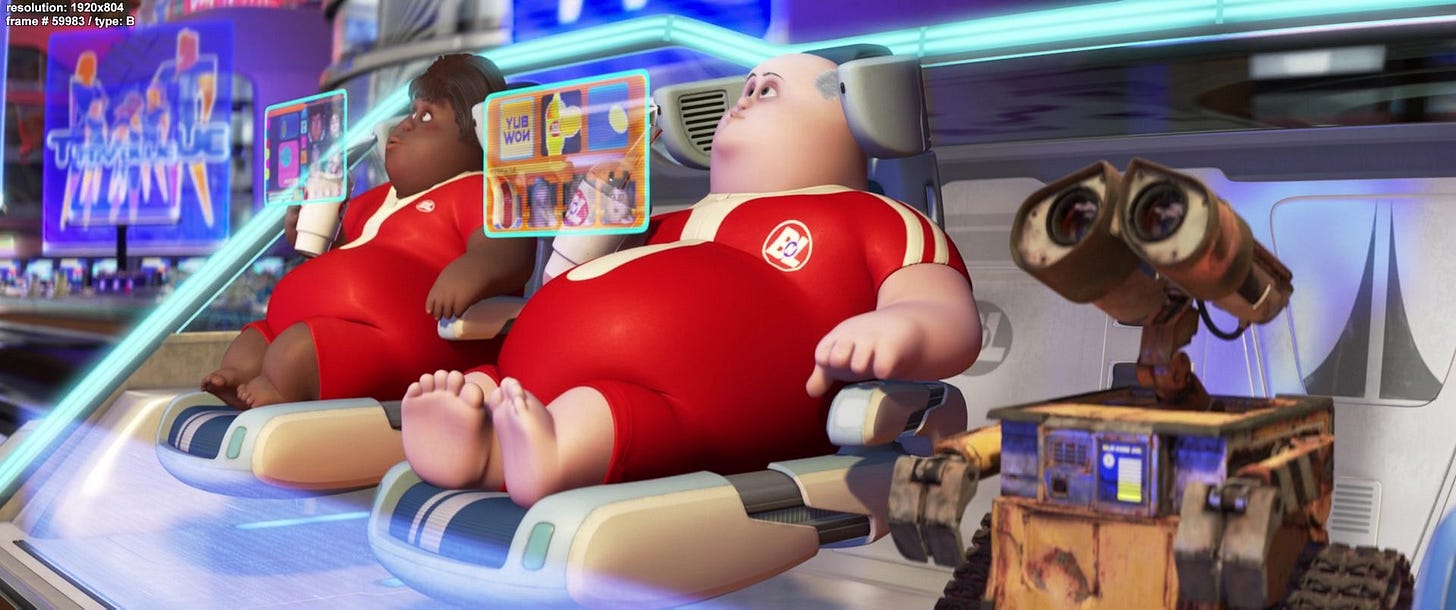

In a similar vein, I suspect that the dehumanizing effect of any single technology is generally of less concern than the cumulative effect of the many distinct, attention-seeking, and assertive technologies that bombard our lives. By “dehumanizing,” I mean the effect of being continuously nudged toward passivity. The cumulative effect may be degrading our natures as acting beings. At some saturation point, we will have frittered away “the dignity of causality,” to use Pascal’s phrase, in exchange for a kind of anesthetized passivity. In such a world, we may belatedly discover that the real prophet of our time was WALL-E. What may prove most interesting is not the movie’s much-discussed vision of physical obesity, but rather its prophetic vision of how technology will cultivate a world requiring little in the way of human agency, nudging us toward lives not too dissimilar from those of feedlot cattle: reduced primarily to being passive consumers of food and entertainment.

It isn’t just the storytellers of Disney who have envisioned this kind of future. Wildly popular cultural critic and historian Yuval Noah Harari, a committed materialist and technocrat, imagines a world populated by an elite composed of technocrats (people like himself, natch) as well as a lower class of “useless people”. He speculates that the techno-elite will manage the presence of such “useless people” by giving them food, drugs, and video games. Sort of a WALL-E meets Cheech & Chong dystopia.

Anyone who spends meaningful time with the elderly can already perceive the loss of agency imposed upon them by technology. With many of the elderly, though, the loss stems not from passivity but from the often gratuitous complexity of the technology itself. Many elderly people very much want to maintain their agency. But in many areas, that agency is being forcibly taken from them by companies and government agencies that refuse to offer any means of customer engagement other than digital technology.

“I would never own a car I couldn’t repair myself!” Thus declared my grandfather, who was born in 1896 and who, even before there was such a thing as digital technology, perceived that technological novelty and convenience could come at a cost to both his independence and his wallet. We may all be about to relearn what my grandfather knew so long ago: the road to dependency is paved with technology just complex enough to exceed our grasp.

Those of us who wish to preserve our independence, to continue living lives of human initiative, will likely need to become more critically discerning about the temptations of tech-induced passivity. Each of us will almost certainly need to embrace some degree of intentional inconvenience. Any hope of sustaining free and independent lives may ultimately depend upon it.

